This course is run at The University of Nottingham within the School of Computer Science & IT. The course is run by Graham Kendall (email: gxk@cs.nott.ac.uk).

Using fixed partitions is a simple method but it becomes ineffective when we have more processes than we can fit into memory at one time. For example, in a timesharing situation where many people want to access the computer, more processes will need to be run than can be fitted into memory at the same time.

The answer is to hold some of the processes on disc and swap processes between disc and main memory as necessary.

On this page we look at how we can manage this swapping procedure.

Just because we are swapping processes between memory and disc does not stop us using fixed partition sizes. However, the reason we are having to swap processes out to disc is that memory is a scarce resource and, as we have discussed, fixed partitions can be wasteful of memory. Therefore it would be a good idea to look for an alternative that makes better use of the scarce resource.

It seems an obvious development to move towards variable partition sizes. That is, partitions that can change size as the need arises. Variable partitions can be summed up as follows:

· The number of partitions varies.

· The sizes of the partitions vary.

· The starting addresses of the partitions vary.

These properties make for a much more effective memory management system, but they also make the process of maintaining the memory much more difficult.

For example, as memory is allocated and deallocated, holes will appear in the memory; it will become fragmented. Eventually, there will be holes that are too small to have a process allocated to them. We could simply shuffle all the memory being used downwards (called memory compaction), thus closing up all the holes. But this could take a long time and, for this reason, it is not usually done.
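The fragmentation and compaction described above can be illustrated with a small simulation. This is only a sketch under stated assumptions: the `Partition` class, the `holes` and `compact` helpers, the 100-unit memory and the process names are all hypothetical and chosen for illustration, not taken from any real operating system.

```python
from dataclasses import dataclass


@dataclass
class Partition:
    name: str   # process name (hypothetical label)
    start: int  # starting address of the partition
    size: int   # size of the partition


def holes(partitions, memory_size):
    """Return (start, size) for every free gap in memory."""
    gaps = []
    cursor = 0
    for p in sorted(partitions, key=lambda p: p.start):
        if p.start > cursor:
            gaps.append((cursor, p.start - cursor))
        cursor = p.start + p.size
    if cursor < memory_size:
        gaps.append((cursor, memory_size - cursor))
    return gaps


def compact(partitions):
    """Shuffle every partition downwards so the free memory forms one hole.

    Returns the number of memory units that had to be copied, which is
    what makes compaction slow.
    """
    copied = 0
    cursor = 0
    for p in sorted(partitions, key=lambda p: p.start):
        if p.start != cursor:
            copied += p.size
            p.start = cursor
        cursor += p.size
    return copied


MEMORY_SIZE = 100  # assumed total memory, in arbitrary units
# Assumed state after some processes have been swapped out.
memory = [Partition("A", 0, 20), Partition("C", 30, 25), Partition("E", 70, 20)]

before = holes(memory, MEMORY_SIZE)  # three separate holes
moved = compact(memory)              # units copied during compaction
after = holes(memory, MEMORY_SIZE)   # a single hole at the top
print(before, moved, after)
```

In this example there are 35 free units before compaction, but the largest hole is only 15 units, so a process needing 30 units could not be loaded. After compaction the free memory is one 35-unit hole, at the cost of copying 45 units.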

Another problem arises if processes are allowed to grow in size once they are running. That is, they are allowed to dynamically request more memory (e.g. with the new operator in C++). What happens if a process requests extra memory such that increasing its partition size is impossible without overwriting another partition's memory? We obviously cannot do that, so do we wait until memory is available so that the process is able to grow into it, do we terminate the process, or do we move the process to a hole in memory that is large enough to accommodate the growing process?

The only realistic option is the last one, although it is obviously wasteful to have to copy a process from one part of memory to another, maybe by swapping it out first.
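The choices facing a growing process can be sketched as follows. This is a simplified illustration, not a definitive implementation: the `free_holes` and `grow` functions, the dictionary layout (name mapped to a `(start, size)` pair) and the example memory map are all assumptions made for this sketch. It grows a partition in place when the hole directly above it is large enough, otherwise moves it to the first hole that can hold the enlarged process, and otherwise reports that the process must wait.

```python
def free_holes(partitions, memory_size):
    """Return (start, size) for each free gap in memory.

    partitions maps a process name to a (start, size) tuple.
    """
    gaps, cursor = [], 0
    for start, size in sorted(partitions.values()):
        if start > cursor:
            gaps.append((cursor, start - cursor))
        cursor = start + size
    if cursor < memory_size:
        gaps.append((cursor, memory_size - cursor))
    return gaps


def grow(partitions, name, extra, memory_size):
    """Try to give process `name` another `extra` units of memory."""
    start, size = partitions[name]
    end = start + size
    new_size = size + extra
    gaps = free_holes(partitions, memory_size)

    # Case 1: the hole immediately above the partition is big enough.
    for hole_start, hole_size in gaps:
        if hole_start == end and hole_size >= extra:
            partitions[name] = (start, new_size)
            return "grown in place"

    # Case 2: copy the whole process to the first hole that can hold it.
    # (Simplification: the space the process vacates is not counted.)
    for hole_start, hole_size in gaps:
        if hole_size >= new_size:
            partitions[name] = (hole_start, new_size)
            return "moved"

    # Case 3: nothing fits, so the process has to wait (or be swapped out).
    return "must wait"


MEMORY_SIZE = 100  # assumed total memory, in arbitrary units
memory = {"A": (0, 20), "B": (20, 30), "C": (60, 10)}

r1 = grow(memory, "B", 5, MEMORY_SIZE)   # hole directly above B is free
r2 = grow(memory, "A", 10, MEMORY_SIZE)  # B is in the way, so A is moved
r3 = grow(memory, "C", 50, MEMORY_SIZE)  # no hole is large enough
print(r1, r2, r3, memory)
```

Note that the "moved" case copies the entire process, which is the wasteful step the text refers to.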

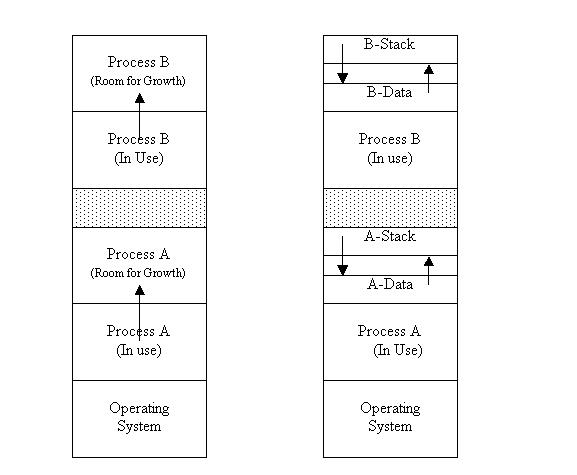

None of the solutions are ideal so it would seem a good idea to allocate more memory than is initially required. This means that a process has somewhere to grow before it runs out of memory (see below).


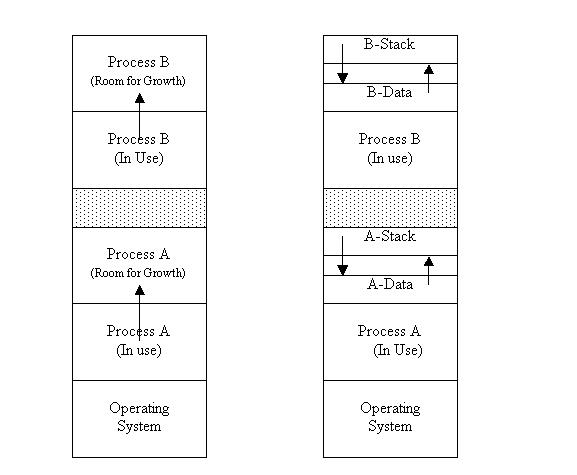

Most processes will be able to have two growing data segments: data created on the stack and data created on the heap. Instead of having the two data segments grow upwards in memory, a neat arrangement has one data area growing downwards and the other data segment growing upwards.

This means that a data area is not restricted to just its own space, and if a process allocates more memory on the heap then it is able to use space that may have been allocated to the stack (see the right-hand figure below).
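The arrangement of two data areas growing towards each other can be modelled in a few lines. This is a minimal sketch under stated assumptions: the `ProcessSpace` class is hypothetical, it assumes the heap grows upwards from the bottom of the partition and the stack grows downwards from the top, and it ignores the program text and any fixed data.

```python
class ProcessSpace:
    """One partition shared by a heap and a stack (hypothetical model)."""

    def __init__(self, size):
        self.size = size
        self.heap_top = 0         # heap occupies [0, heap_top), grows upwards
        self.stack_bottom = size  # stack occupies [stack_bottom, size), grows downwards

    def free(self):
        """Room left between the top of the heap and the bottom of the stack."""
        return self.stack_bottom - self.heap_top

    def grow_heap(self, amount):
        if amount > self.free():
            return False          # the heap would collide with the stack
        self.heap_top += amount
        return True

    def grow_stack(self, amount):
        if amount > self.free():
            return False          # the stack would collide with the heap
        self.stack_bottom -= amount
        return True


space = ProcessSpace(100)
ok_stack = space.grow_stack(10)    # stack takes 10 units from the top
ok_heap = space.grow_heap(70)      # heap takes 70 units, well over half the partition
remaining = space.free()           # 20 units left in the shared gap
too_much = space.grow_stack(30)    # refused: only 20 units remain
print(ok_stack, ok_heap, remaining, too_much)
```

Because neither area has a fixed limit of its own, the heap here is able to use 70 of the 100 units; with a fixed 50/50 split the same request would have failed.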

In the next few pages we are going to look at three ways in which the operating system can keep track of the memory usage. That is, which memory is free and which memory is being used.


Last Updated : 23/01/2002