NEWS:

01/08/2017: New AU Survey now available in Transactions on Affective Computing!

19/01/2017: Our paper on automatic gestational age estimation using computer vision won the best paper award at Face and Gesture Recognition 2017, Washington DC, USA.

19/01/2017: Matlab demo code for the Dynamic Deep-Learning AU detector presented in Shashank Jaiswal's WACV paper (which attained the highest score on FERA 2015) is now available.

06/01/2017: A major output of ARIA-VALUSPA is our multimedia annotation tool NoVa, which addresses limitations of Anvil and ELAN. NoVa comes with integrated support for replaying interactions with ARIA-Agents and for cooperative learning.

06/01/2017: The new ARIA-VALUSPA Platform (APV 2.0) is out now, the second public release of our Virtual Human framework. APV 2.0 was developed as part of the EU Horizon 2020 project ARIA-VALUSPA. It comes with a tutorial on how to build your own virtual human scenario with our platform.

20/11/2015: Shashank Jaiswal's WACV paper attained the highest scores on the FERA 2015 AU detection sub-challenge using a dynamic deep learning architecture that combines shape, appearance, CNNs and LSTMs. See results below:

16/11/2012: AVEC test labels publicly available. Now that AVEC 2012 has concluded successfully, we are releasing the test labels for both AVEC 2011 and AVEC 2012. Please download them from the AVEC database site using your existing account.

Michel Valstar is an Associate Professor at the University of Nottingham, School of Computer Science, and a researcher in Automatic Visual Understanding of Human Behaviour. He is a member of both the Computer Vision Lab and the Mixed Reality Lab. Automatic Human Behaviour Understanding encompasses Machine Learning, Computer Vision, and a good understanding of how people behave in the world.

Dr Valstar is currently coordinator of the H2020 LEIT project ARIA-VALUSPA, which aims to create the next generation of virtual humans. He has also recently received a prestigious award from the Bill & Melinda Gates Foundation to automatically estimate babies' gestational age after birth using a mobile phone camera, for use in countries without access to ultrasound scans. Michel was a Visiting Researcher in the Affective Computing group at the MIT Media Lab, and a research associate with the iBUG group, part of the Department of Computing at Imperial College London. Michel's expertise is facial expression recognition, in particular the analysis of FACS Action Units. He recently proposed a new field of research called 'Behaviomedics', which applies Affective Computing and Social Signal Processing to medicine to help diagnose, monitor, and treat medical conditions that alter expressive behaviour, such as depression.

Dr Valstar co-organised the Audio/Visual Emotion Challenge (AVEC) and Workshop series from 2011 to 2015, the first event of its kind and a premier venue that advanced the field of Affective Computing.

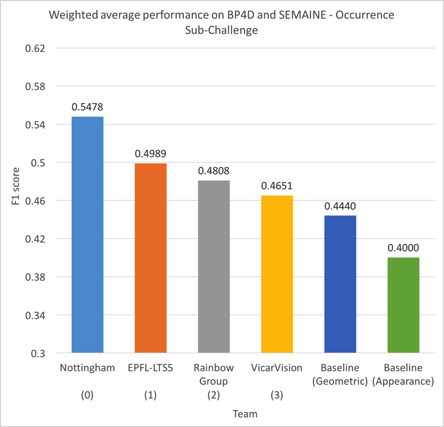

He also initiated and organised the FERA competition series (two editions so far), another first-of-its-kind event in the Computer Vision community, focusing on Facial Action Recognition.

He serves as an Associate Editor for the IEEE Transactions on Affective Computing. Dr Valstar has also contributed substantially to freely accessible data (e.g., SEMAINE semaine-db.eu, MMI Facial Expression mmifacedb.eu, or GEMEP-FERA) and code (e.g., LAUD and BoRMan), resources that have repeatedly advanced the field.

In the United Kingdom, he launched AC.UK in 2012, a meeting of the UK Affective Computing community that has been a great success ever since.

Lastly, his more than 80 high-quality publications have so far attracted more than 3,600 citations (h-index 28, source: Google Scholar).

Dr Valstar also frequently serves on Technical Program Committees in the field.