Project ideas

-

Below you can find several project ideas that I am happy to supervise. Nevertheless, I am open to considering your own ideas as long as they are aligned with my research interests. Please refer to my Research interests and Publications for more information.

If you wish to talk about any of these, email me at Isaac.Triguero@nottingham.ac.uk for an appointment.

Data science techniques for Smart meter data

-

I am currently working with the energy provider E.ON on a larger project that aims to turn passive customers into active customers by providing data science and optimisation capabilities that analyse smart meter data. Multiple projects could be done with smart meter data, including big data solutions (e.g. a Spark-based algorithm for disaggregation), recommender systems, anomaly detection and demand prediction. All of these projects involve the use of data mining to explore the data and provide a decision-making tool for E.ON. We will use real data for these projects.

A visualisation tool to analyse Few shot learning:

-

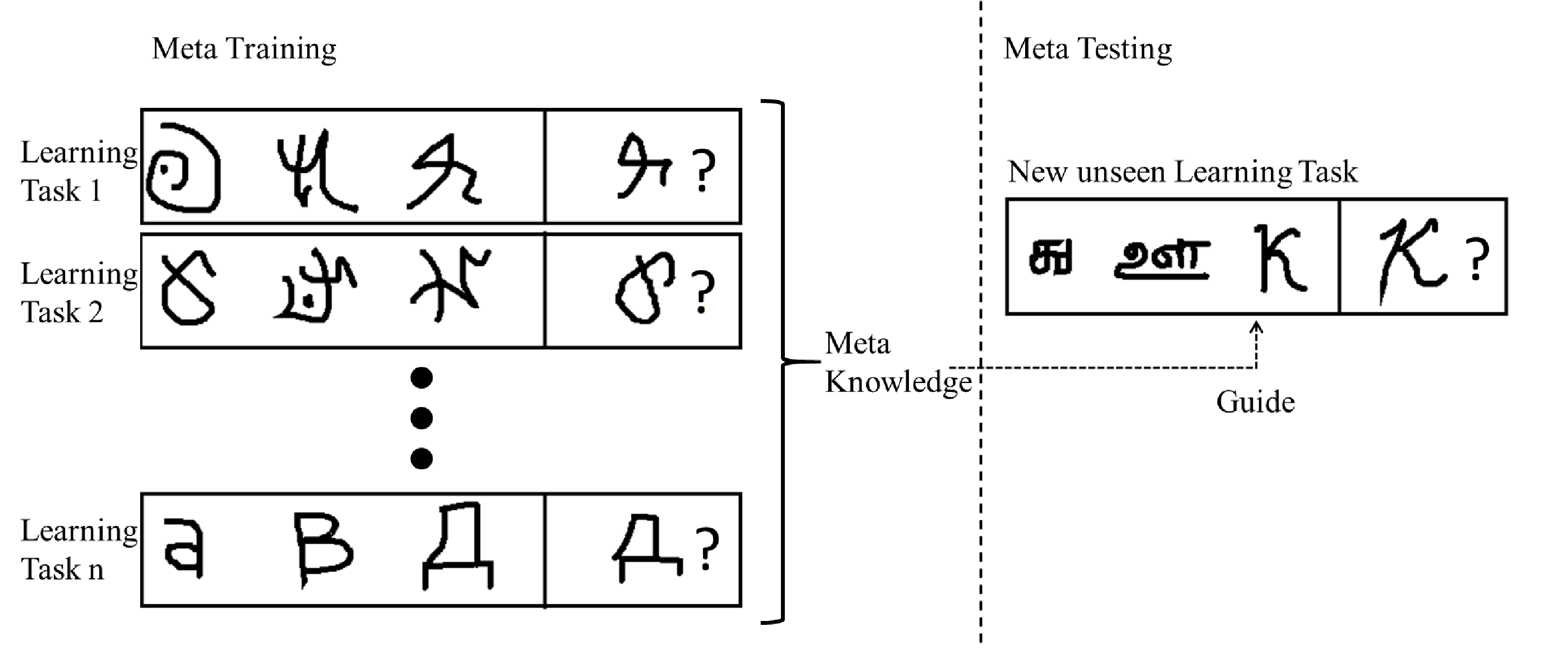

Few shot learning is motivated by the fact that humans can learn new concepts quickly, while machine learning algorithms, especially deep learning ones, need a lot of examples to learn hidden patterns. Therefore, few shot learning techniques aim to learn new concepts from one or a few examples.

Meta-learning is a two-level learning method that extracts meta-knowledge across different machine learning tasks and uses it to guide learning on unseen tasks. This is, so far, one of the most successful approaches to tackling few shot learning problems. One successful method in this branch aims to accelerate the optimisation process of training a machine learning model on very few examples while avoiding overfitting. It uses recurrent neural networks to learn a fast and generic optimisation algorithm, and it also learns a generic initialisation that can be quickly fine-tuned to promising model parameters for different tasks.

The idea of this project is to visualise the behaviour of this approach. You will need to implement the method and show how model parameters change towards promising ones in a few steps compared to traditional machine learning algorithms.

Label Distribution Learning for Multi-class Classification of Galaxies:

-

Learning with ambiguity is a hot topic in recent machine learning and data mining research. We can consider the learning process as a mapping from the instances in a dataset to the label(s) characterising the problem at hand. Whereas single-label learning, where a unique label is assigned to each example, presents a great advantage in some cases (binary classification), the most general approach of multi-label learning entails the scenario in which instances belong simultaneously to a set of predefined classes (multi-class classification). In the latter setting, the way we cope with the (possible) uncertainty on the label side is essential to come up with realistic models applicable to real-world problems.

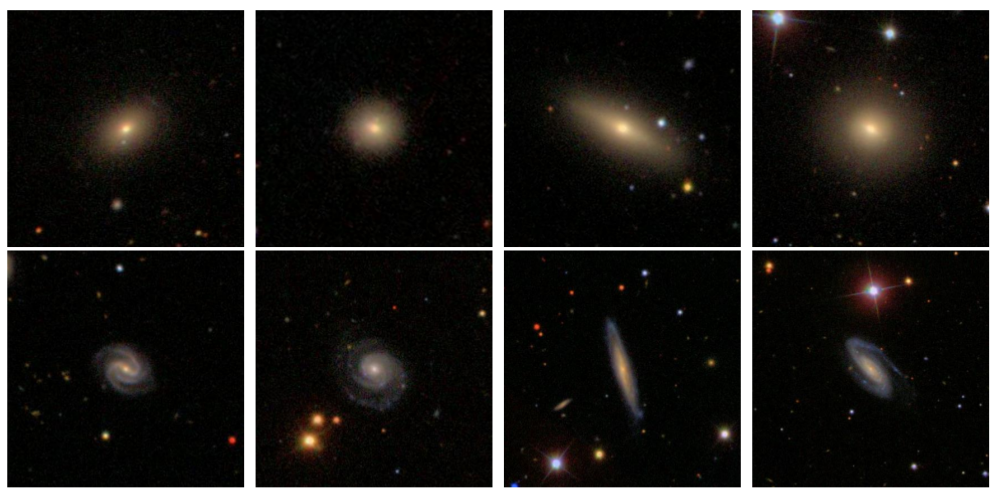

In this framework, one of the latest proposals is Label Distribution Learning (LDL). LDL models deal with multi-label learning with label ambiguity, where the overall distribution of the importance of the labels matters. Unlike some classical approaches to multi-label classification, which aim to transform the problem into a variable number of single-label problems, LDL handles the relative importance of each label involved in the description of the instance. This novel learning paradigm is showing promising results in problems such as multi-label ranking of images, emotion recognition in face images, and multi-label classification of images.

In this project, you will work with LDL algorithms already proposed in the literature, exploring their behaviour and their potential upsides and drawbacks with respect to well-established multi-class classification approaches. The goal is to apply this model to big datasets of galaxy images (from the GalaxyZoo project).
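As a rough illustration of the label distribution idea described above, the sketch below contrasts a single-label view of an instance with a label distribution, and scores two predictions against that distribution using the Kullback-Leibler divergence, a criterion often used in LDL. The class names and numbers are invented for illustration; they are not taken from GalaxyZoo.

```python
import math

def kl_divergence(true_dist, pred_dist, eps=1e-12):
    """KL(true || pred) between two label distributions over the same labels.
    eps avoids log(0) when a prediction assigns zero mass to a label."""
    return sum(t * math.log((t + eps) / (p + eps))
               for t, p in zip(true_dist, pred_dist) if t > 0)

# Hypothetical class names, for illustration only
labels = ["smooth", "disk", "artifact"]

# A label distribution: description degrees that sum to 1
true_dist = [0.6, 0.3, 0.1]

# The single-label view keeps only the most important label
single_label = labels[max(range(len(labels)), key=true_dist.__getitem__)]

pred_close = [0.55, 0.35, 0.10]   # respects the relative importance of labels
pred_onehot = [1.0, 0.0, 0.0]     # what a hard single-label output looks like

print(single_label)
print(kl_divergence(true_dist, pred_close))
print(kl_divergence(true_dist, pred_onehot))
```

The one-hot prediction picks the right top label but is penalised heavily, because it discards the mass that the true distribution places on the other labels.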

Optimisation and Machine Learning for Workforce Scheduling and Routing Problem:

-

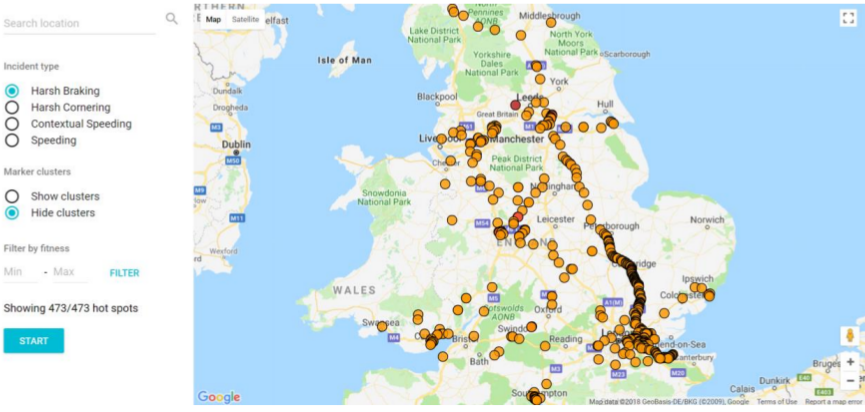

The Workforce Scheduling and Routing Problem refers to the assignment of personnel to visits at various geographical locations. Optimisation techniques, such as genetic algorithms, can be used to solve such a problem, and the solutions could be further improved if machine learning is considered as well. Once the best assignment has been found, the goal is to visualise that solution on a map to help workers see their schedules. This application could benefit many employers as a service for task management and for helping their personnel. See the figure above for a rough idea of what the application could look like.

Visualising the behaviour of Semi-supervised classification techniques

-

The Semi-Supervised Learning (SSL) paradigm has attracted much attention in many different fields ranging from bioinformatics to web mining, where it is easier to obtain unlabeled than labeled data because it requires less effort, expertise and time. In this context, traditional supervised learning is limited to using labeled data to build a model. SSL is a learning paradigm concerned with the design of models in the presence of both labeled and unlabeled data. Essentially, SSL methods use unlabeled samples to either modify or reprioritize the hypothesis obtained from labeled samples alone.

A successful methodology to tackle the SSL problem is based on traditional supervised classification algorithms. These techniques aim to enlarge the number of labeled examples by labeling the unlabeled training points. They iteratively add their most confident predictions to the pool of labeled examples, and then they repeat the process.

The idea of this project is to visualise the behaviour of this kind of technique. You will have to implement some basic semi-supervised models (self-training and co-training) and show, on a 2-D input dataset, how the decision boundaries between classes change as you add newly labeled points to the training data.
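The self-training loop described above can be sketched as follows. This is only a minimal illustration under simplifying assumptions: the base classifier is a nearest-centroid rule, confidence is taken as the distance margin between the two closest centroids, and the data are two synthetic 2-D blobs. The function names and the `per_round` parameter are made up for this example.

```python
import math
import random

def centroids(points, labels):
    """Mean point of each class: the (very simple) base classifier."""
    sums = {}
    for (x, y), c in zip(points, labels):
        sx, sy, n = sums.get(c, (0.0, 0.0, 0))
        sums[c] = (sx + x, sy + y, n + 1)
    return {c: (sx / n, sy / n) for c, (sx, sy, n) in sums.items()}

def predict(cents, p):
    """Return (label, confidence); confidence is the distance margin
    between the closest and the second-closest centroid."""
    dists = sorted((math.dist(p, m), c) for c, m in cents.items())
    return dists[0][1], dists[1][0] - dists[0][0]

def self_training(lab_pts, lab_y, unlab_pts, per_round=5):
    """Repeatedly move the most confidently predicted unlabeled points
    into the labeled pool, recording the centroids after each round."""
    lab_pts, lab_y, pool = list(lab_pts), list(lab_y), list(unlab_pts)
    history = [centroids(lab_pts, lab_y)]
    while pool:
        cents = history[-1]
        scored = sorted(pool, key=lambda p: predict(cents, p)[1], reverse=True)
        chosen, pool = scored[:per_round], scored[per_round:]
        for p in chosen:
            lab_pts.append(p)
            lab_y.append(predict(cents, p)[0])
        history.append(centroids(lab_pts, lab_y))   # retrain on the larger pool
    return lab_pts, lab_y, history

def make_blob(cx, cy, n):
    """Synthetic 2-D Gaussian blob centred at (cx, cy)."""
    return [(random.gauss(cx, 0.5), random.gauss(cy, 0.5)) for _ in range(n)]

random.seed(0)
class_a, class_b = make_blob(0, 0, 30), make_blob(4, 4, 30)
labeled, labels = [class_a[0], class_b[0]], [0, 1]   # one labeled point per class
unlabeled = class_a[1:] + class_b[1:]
points, final_labels, history = self_training(labeled, labels, unlabeled)
print(len(history), "models recorded")
```

Each entry of `history` is one model in the sequence. For a nearest-centroid rule, the decision boundary is the perpendicular bisector of the two centroids, so plotting it for every entry shows how the boundary moves as points are added.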

Other Ideas

- Any recent competition related to data science can be used as a basis for a project. For example, you can have a look at the data mining competitions hosted on https://www.kaggle.com/.

- Visualisation tool for evolutionary models in data mining.

- Visualisation tool for big data sets using Apache Spark.

- Machine learning models in the big data context.

Previous proposals

Note that the following projects have already been carried out, but there is still the option of revisiting them from a different angle.

Fingerprint recognition

-

Personal identification is an important issue in many fields such as criminology, forensic identification, payments or identification in computer systems. Among all the biometric features that can be used for identification, such as voice, iris or DNA, fingerprints are the most widely used.

The design of Automatic Fingerprint Identification Systems (AFISs) may be considered in two different settings (known as verification and identification), from which the following project ideas derive:

- You create an app that allows users to verify their identity by using either a fingerprint scanner or a picture of their fingerprint. The system must be robust to rotations, translations and deformations of the skin, and ensure that no one else is erroneously granted access.

You will be provided with some software for feature extraction and some fingerprint databases. However, you will be responsible for the implementation of the matching algorithm.

Your software must show the given fingerprint image along with the extracted features (see the picture below for an example), as well as a response stating whether access is granted or not.

- You create a parallel program that is able to identify an individual fingerprint among a large database of fingerprints. The system must be robust to rotations, translations and deformations of the skin.

You will have access to some code on which you will base your parallelisation scheme. I will be very happy if you want to explore different parallelisation technologies such as MPI or GPUs.

The resulting program should have a graphical user interface that allows us to take a given fingerprint and shows the most similar fingerprint in the database.

Data Visualisation and Generation for Machine Learning Algorithms

-

In this project, the main idea is to design a program that allows users to construct (draw) their own artificial dataset in an easy manner. The program must be highly flexible, allowing the user to choose the number of dimensions, the kind of distribution of the data (if any), the number of points (density), shapes, etc.

As a result, the user will get a dataset as a CSV text file that represents the drawn figure. The software then has to be linked to some classification algorithms (you can use scikit-learn implementations, Weka, R, etc.) that label the given dataset. Then, the decision boundaries between classes can be represented (see figure below).
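The pipeline described above (generate a dataset, export it as CSV, classify it, and evaluate the classifier on a grid to reveal the decision boundary) could be sketched as below. This is an assumption-laden illustration: two Gaussian blobs stand in for a user-drawn dataset, and a small hand-written k-nearest-neighbours rule stands in for a library classifier such as those in scikit-learn. All function names are hypothetical.

```python
import csv
import io
import math
import random

def make_dataset(n_per_class=50, seed=0):
    """Two Gaussian blobs standing in for a user-drawn 2-D dataset."""
    rng = random.Random(seed)
    rows = []
    for label, (cx, cy) in enumerate([(0.0, 0.0), (3.0, 3.0)]):
        for _ in range(n_per_class):
            rows.append((rng.gauss(cx, 1.0), rng.gauss(cy, 1.0), label))
    return rows

def to_csv(rows):
    """Serialise the dataset as CSV text with a header row."""
    buf = io.StringIO()
    writer = csv.writer(buf)
    writer.writerow(["x", "y", "label"])
    writer.writerows(rows)
    return buf.getvalue()

def knn_predict(rows, point, k=5):
    """Majority vote among the k nearest training points."""
    nearest = sorted(rows, key=lambda r: math.dist(r[:2], point))[:k]
    votes = [r[2] for r in nearest]
    return max(set(votes), key=votes.count)

def boundary_grid(rows, lo=-3.0, hi=6.0, steps=10):
    """Predicted label at every node of a regular grid; drawing this grid
    as a coloured image reveals the decision boundary."""
    step = (hi - lo) / (steps - 1)
    return [[knn_predict(rows, (lo + i * step, lo + j * step))
             for i in range(steps)] for j in range(steps)]

rows = make_dataset()
csv_text = to_csv(rows)
grid = boundary_grid(rows)
for line in reversed(grid):          # print with y increasing upwards
    print("".join(str(v) for v in line))
```

The printed grid is a coarse text rendering of the regions assigned to each class. A real tool would use a finer grid and a plotting library to draw it.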

Vehicle Incident Hot Spots Identification

-

Transportation research mostly aims at establishing the means for improving driving performance, economy and safety. Logistics complexity coupled with large transport networks has required the widespread use of sensors, tracking devices, and mobile communication equipment in order to enable such developments. These devices constantly gather information about vehicles and their journeys. This includes, for instance, safety hazards, vehicle diagnostics and driving behaviour. Given the velocity at which large volumes of data are produced, the challenge is to establish effective tools for fast processing and analysis so that the information can be employed by transport stakeholders in a timely manner.

The aim of this project is to come up with a big data algorithm (based on Spark Streaming) to identify Hot Spots for traffic incidents and accidents on very large datasets.
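One simple way to think about hot spot identification is to bin incident coordinates into a regular grid and rank the cells by count. The sketch below does this in plain Python on a handful of invented coordinates. It is not Spark Streaming code, and the cell size and function names are assumptions made for illustration. In a streaming setting, the same per-cell counting would be applied to each incoming batch and the counts merged.

```python
from collections import Counter

def cell_of(lat, lon, cell_size=0.01):
    """Map a coordinate to the integer index of its grid cell."""
    return (int(lat // cell_size), int(lon // cell_size))

def hot_spots(incidents, cell_size=0.01, top=3):
    """Count incidents per grid cell and return the busiest cells."""
    counts = Counter(cell_of(lat, lon, cell_size) for lat, lon in incidents)
    return counts.most_common(top)

# Invented (latitude, longitude) pairs, for illustration only
incidents = [
    (52.951, -1.151), (52.952, -1.152), (52.953, -1.158),
    (52.995, -1.105), (52.996, -1.104),
    (53.005, -1.205),
]

top = hot_spots(incidents, top=2)
for cell, count in top:
    print(cell, count)
```

A fixed grid is the crudest choice. Density-based clustering or road-network-aware binning are natural refinements to explore.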

Graph-based semi-supervised learning in Spark

-

This project will focus on exploiting small amounts of annotated data together with a large number of unannotated samples within a semi-supervised big data scheme. More specifically, we want to extend the capabilities of graph-based SSL models to the big data context. Despite their good performance, current approaches become ineffective and inefficient on big datasets (large number of examples and/or features) due to the scale of the problem.

In this project, you will learn big data technologies such as Apache Spark and use them to explore different strategies for designing graph-based SSL for big datasets.
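To make the scalability issue concrete, here is a minimal single-machine sketch of one graph-based SSL scheme, label propagation over a dense RBF affinity graph. It is an illustrative assumption, not the method to be developed in the project. The function name, `sigma` and the toy points are invented. The dense `n x n` weight matrix is exactly the part that stops fitting in memory as the number of examples grows.

```python
import math

def label_propagation(points, labels, sigma=1.0, iters=50):
    """labels holds a class index for labeled points and None for unlabeled
    ones. Builds a dense RBF affinity graph (O(n^2) memory) and repeatedly
    averages neighbours' label scores, keeping known labels clamped."""
    n = len(points)
    classes = sorted({c for c in labels if c is not None})
    w = [[math.exp(-math.dist(p, q) ** 2 / (2 * sigma ** 2)) if i != j else 0.0
          for j, q in enumerate(points)] for i, p in enumerate(points)]
    # Initial scores: one-hot for labeled points, zeros for unlabeled ones
    f = [[1.0 if labels[i] == c else 0.0 for c in classes] for i in range(n)]
    for _ in range(iters):
        new_f = []
        for i in range(n):
            if labels[i] is not None:        # clamp the known labels
                new_f.append(f[i])
                continue
            total = sum(w[i])
            new_f.append([sum(w[i][j] * f[j][k] for j in range(n)) / total
                          for k in range(len(classes))])
        f = new_f
    return [classes[max(range(len(classes)), key=row.__getitem__)] for row in f]

# Two small groups of points, with a single labeled point in each
points = [(0, 0), (0.5, 0.2), (1.0, 0.1), (5, 5), (5.5, 5.2), (6.0, 4.9)]
labels = [0, None, None, 1, None, None]
result = label_propagation(points, labels)
print(result)
```

Sparse k-nearest-neighbour graphs and distributed graph construction are two common ways of reducing this quadratic cost.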